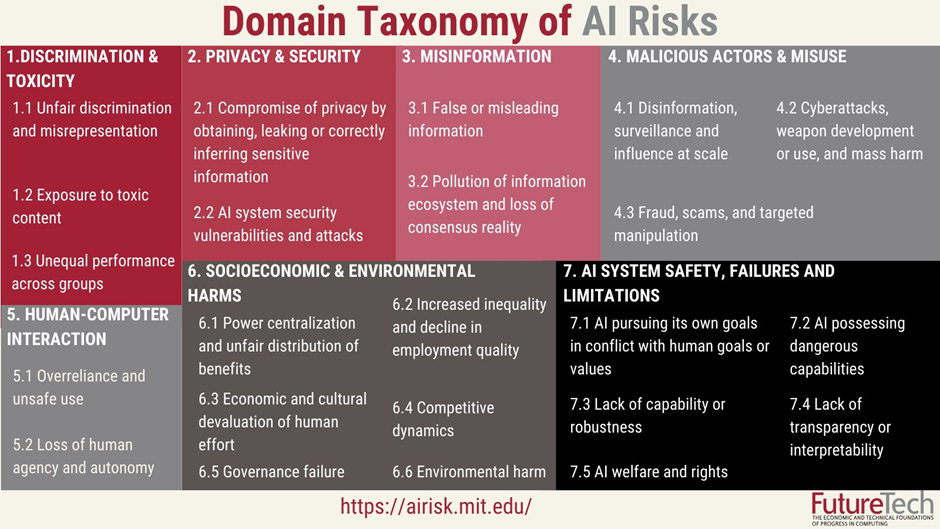

Scientists at one of the world’s leading universities have created a threat list warning of the risks posed by artificial intelligence.

Researchers at the Massachusetts Institute of Technology (MIT) have published an AI Risk Repository which lists over 700 different ways in which AI could threaten society.

Covering everything from job displacement and privacy violations to the potential for mass destruction, the repository is described by MIT as the most comprehensive catalogue of AI risks to date.

Among the most alarming risks outlined in the database is the potential for AI to be weaponised by criminals, rogue states, or even terrorist groups.

AI systems designed for harmless applications, such as drone delivery or therapy, could be repurposed for violent ends, according to the report.

MIT researchers suggest that “an algorithm designed to pilot delivery drones could be re-purposed to carry explosive charges, or an algorithm designed to deliver therapy that could have its goal altered to deliver psychological trauma,” illustrating the real danger of AI falling into the wrong hands.

The report also examines the use of AI in warfare. Although some experts argue that AI-driven drones could lead to casualty-free battles, others warn that the technology is not far from enabling mass violence.

The report states: “Escalation of such conflicts could lead to unprecedented violence and death, as well as widespread fear and oppression among populations targeted by mass killings.”

Peter Slattery, project lead and a researcher at MIT, said: “By identifying and categorising risks, we hope that those developing or deploying AI will think ahead and make choices that address or reduce potential exposure before they are deployed.”

MIT warns that AI poses significant risks to privacy and fairness. The institute highlights concerns that AI technologies can be used to collect, analyse, and exploit personal data, often without individuals’ consent.

The study states that large AI systems trained on vast amounts of data are increasingly likely to embed and misuse personal information, such as names, addresses, and even medical records. This AI-facilitated intrusion into private life raises ethical concerns about how personal data is being harvested and used.

The potential for AI to spread and amplify bias is another risk highlighted by MIT. The report notes that AI algorithms can reflect the biases present in the data they are trained on.

The report adds that such a development could lead to widespread discrimination, disproportionately affecting marginalised groups.

The study also considers a future in which AI biases shape people’s lives, determining whether they receive a loan, a job offer, or a fair trial.

The study further highlights the digital divide created by AI. These technologies, often developed by large tech companies, are more accessible to wealthier individuals.

The report states: “AI assistant technology has the potential to disproportionately benefit economically richer individuals who can afford to purchase access.” The result is a gap in which wealthier individuals enjoy enhanced productivity and opportunities, while lower-income individuals remain reliant on outdated or inefficient methods. According to the report, this inequality extends beyond mere convenience: it affects everything from job applications to healthcare, and even social interactions.

Another risk listed in the database is the impact of AI on the job market. MIT states: “AI systems are increasingly automating many human tasks, potentially leading to significant job losses.”

Separately, the Institute for Public Policy Research suggests that up to eight million jobs in the UK, roughly 25 per cent of the workforce, could be at risk due to AI.

Entire sectors, from transportation to public safety, are likely to see major shifts as AI systems take over human roles, according to the report. MIT warns of a future where AI agents compete against humans for jobs, leading to economic displacement and social inequality.

The researchers add that the threat of AI automation can create exploitative dependencies between workers and employers. To compete with AI, the report warns, “human workers may be pressured to accept lower wages, fewer benefits, and poorer working conditions.”