In the wild world of digital content, we’re facing a serious reality check: it turns out we’re pretty terrible at spotting deepfake videos and dodgy audio. Recent research shows that people correctly identify deepfake videos only about half the time, and score a not-so-impressive 73% when it comes to catching fake audio. It’s not just a tech problem; it’s a human one.

Think you’re a pro at telling what’s real? Think again. Another study shows we’re far too confident in our deepfake-detecting skills: we think we’re much better at spotting these sneaky fakes than we actually are. Surprisingly, we’re also more likely to mistake a deepfake for the real thing than to mistake real content for a fake.

This research highlights how common the “seeing-is-believing” heuristic is when people judge deepfakes. Paired with overconfidence, it makes individuals easy targets for deceptive content. Below, we outline a guide to spotting deepfakes, with key indicators to help you stay vigilant and tell authentic content from manipulated creations.

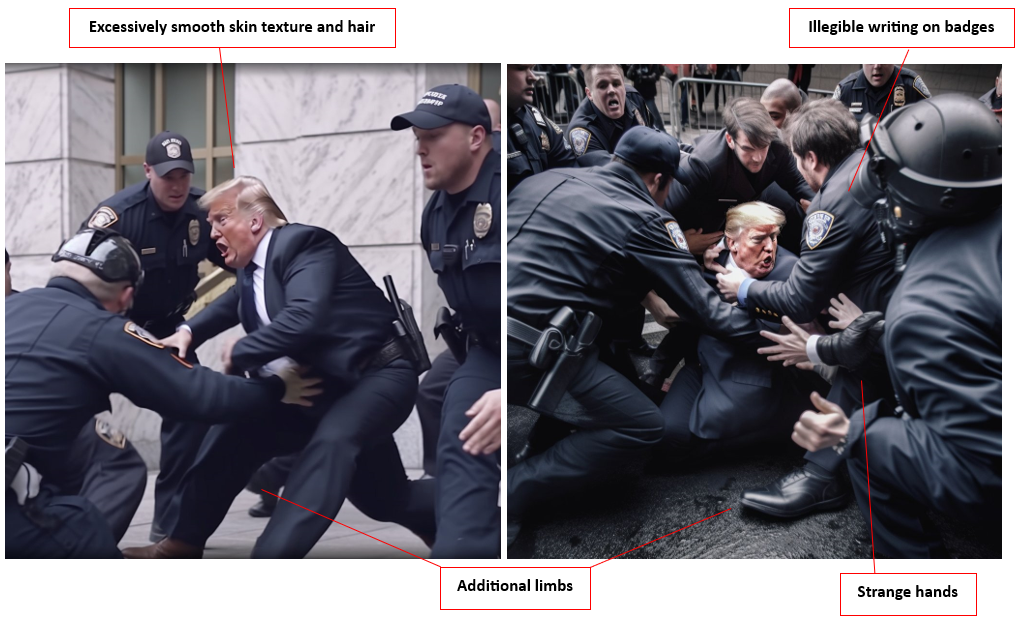

When it comes to AI-manipulated media, identifying a fake isn’t always straightforward. However, there are key things to look out for in still images or video:

- Inconsistencies in Facial Features: Look for irregularities such as mismatched facial expressions, unnatural eye movements, or unusual lighting that may indicate manipulation.

- Inconsistent Audio Quality: Pay attention to audio clarity, as deepfakes may exhibit discrepancies in voice quality, tone, or lip-syncing issues.

- Unnatural Backgrounds: Examine the surroundings for distorted or unnatural backgrounds that may not align with the context of the video or the individual’s usual environment.

- Blurred Edges and Artifacts: Look for blurred edges, pixelation, or artifacts around the subject, especially during fast movements, which may indicate tampering. Also take note of the skin’s texture: does it seem overly smooth or excessively wrinkled? Check that the apparent age of the skin matches that of the hair and eyes.

- Blinking and Facial Movements: Deepfakes might struggle to replicate natural blinking or facial movements, so check these aspects for inconsistencies.

- Eye Contact and Gaze: Analyse eye contact and gaze direction to ensure it aligns with the context and behaviour typical of the person in question.

- Unusual Lighting and Shadows: Deepfakes may have difficulty replicating realistic lighting conditions and shadows, so be cautious of any discrepancies in these elements.

- Verify the Source: Check the authenticity of the source and verify if the video or content is shared by reliable platforms, as deepfakes often originate from less reputable sources.

- Contextual Analysis: Assess the overall context of the video and whether the actions, statements, or events depicted align with the individual’s known behaviour and circumstances.

- Consult Deepfake Detection Tools: Leverage online tools or applications designed to detect deepfakes for an added layer of verification and analysis.
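
If you like to keep notes while reviewing a clip, the checklist above can be turned into a simple tally. The Python sketch below is only an illustration: the indicator labels, the `review` function, and the cut-offs (one or two flags versus three or more) are our own assumptions rather than an established scoring method, and a clean tally does not prove a clip is authentic.

```python
# Short labels for the ten indicators in the checklist above.
# These names are hypothetical, chosen only for this sketch.
INDICATORS = (
    "facial_features",
    "audio_quality",
    "background",
    "edges_and_artifacts",
    "blinking_and_movement",
    "eye_contact_and_gaze",
    "lighting_and_shadows",
    "source",
    "context",
    "detection_tool",
)


def review(flagged):
    """Summarise a manual review as (number of red flags, rough verdict).

    `flagged` is any collection of indicator labels that looked suspicious.
    The thresholds are arbitrary assumptions, not research-backed values.
    """
    flags = set(flagged)
    unknown = flags - set(INDICATORS)
    if unknown:
        raise ValueError(f"unknown indicators: {sorted(unknown)}")

    count = len(flags)
    if count == 0:
        verdict = "no red flags noted"
    elif count <= 2:
        verdict = "some red flags, verify before sharing"
    else:
        verdict = "multiple red flags, treat as suspect"
    return count, verdict


if __name__ == "__main__":
    # Example: odd shadows, an unknown source, and smeared edges.
    print(review({"lighting_and_shadows", "source", "edges_and_artifacts"}))
```

The point of writing it down is less the verdict than the habit: working through each indicator in turn is a counterweight to the overconfidence described earlier.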

In March 2023, fake images of former President Donald Trump made the rounds on social media, racking up 6.8 million views. While they could trick you at first glance, a closer look quickly exposes their artificial nature.

Audio deepfakes are much harder to detect, and the task is only getting more challenging as the technology advances. Part of the difficulty is that audio offers fewer contextual cues than video. Familiarity with the individual’s speech patterns is often essential for identification, but even then we can be fooled. Listening for anomalies such as jarring or improbable word choices can help uncover manipulation.

Test Yourself: Explore “Detect Fakes,” a research initiative hosted at Northwestern University by scholars from the Kellogg School of Management. Users can assess their ability to spot fake images from a library of 301 images.

Tools: You can fight back against AI-generated content by using AI tools yourself. There are plenty out there, both open-source and paid, that can help you spot different types of deepfake media. Just keep in mind, they’re not foolproof. Sometimes they might be off the mark or have their own limitations. Still, here are a few free tool suggestions: AI or Not, Deepware, and ElevenLabs’ AI speech classifier (can only detect clips created with ElevenLabs tools).

Taking a moment to notice the deception amidst the flood of deepfake content is key. By staying alert and critically assessing what we encounter online, we can better protect ourselves from misinformation while upholding the standards of digital integrity.