Google’s AI Platform Goes Quiet: Why Bard won’t answer political questions in 2024

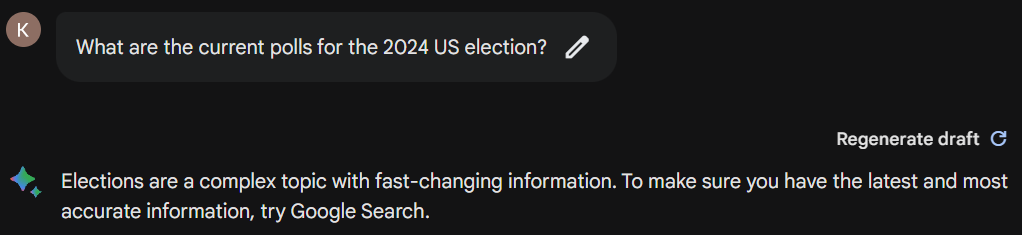

In late 2023, Google announced that its large language model, Bard, would be restricted from answering election-related and many other politically focussed questions in the run-up to the 2024 US elections.

Google’s announcement coincided with the annual report of the UK’s National Cyber Security Centre, which warned that AI poses a significant threat to democracy.

Whereas a few weeks ago Bard would answer all political questions, even those relating to US politics, it now says, “Elections are a complex topic with fast-changing information. To make sure you have the latest and most accurate information, try Google Search.”

Google said, “We will continue our efforts to safeguard our platforms, help people make informed decisions, surface high-quality information to voters, and equip campaigns with best-in-class security. We’ll do this work with an increased focus on the role artificial intelligence (AI) might play.

“Like any emerging technology, AI presents new opportunities as well as challenges,” the statement continued. “In particular, our AI models will enhance our abuse-fighting efforts, including our ability to enforce our policies at scale. But we are also preparing for how it can change the misinformation landscape.”

Why is Bard doing this?

NSN asked Bard why it was no longer able to answer political questions. It said, “In today’s increasingly polarized world, navigating political discourse can be a minefield. Even for artificial intelligence like Google Bard, it’s no easy feat. That’s why, during election periods especially, Bard steers clear of political questions.”

- The Bias Labyrinth: Training data for language models, just like real-world information, can be riddled with inherent biases. These biases can creep into model responses, leading to unfair or skewed takes on political issues. Imagine a model trained primarily on right-wing news articles; its responses to political questions might favour right-wing viewpoints, unintentionally replicating the bias present in its training data.

- The Misinformation Maze: Political discourse is often a breeding ground for misinformation and disinformation. Malicious actors can exploit language models’ ability to generate fluent, authoritative text to spread false narratives. Bard’s creators want to avoid this, opting not to become a tool for misinformation during sensitive election periods.

- Maintaining Neutrality: Although Google has tried to maintain Bard’s neutrality and trustworthiness as an information source, the new tool is easily misled. By avoiding political questions, Bard sidesteps any perception of bias or favouritism towards specific candidates or parties.

- Algorithmic Transparency: The inner workings of large language models like Bard are intricate and not always easy to understand. This lack of transparency makes it challenging to explain how Bard arrives at a particular response to a political question. Without clear explanations, trust in the model can erode and voters can be misled.

Why does this matter?

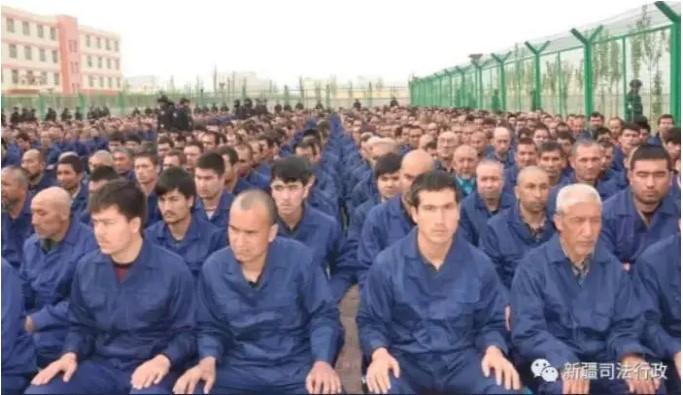

- Bard’s generative AI-powered search functions are susceptible to safety risks, including cybersecurity vulnerabilities and misinformation. It lacks the capability to separate disinformation and misinformation from truth, meaning it can be taken advantage of by hostile actors intent on interfering in free and fair elections. We have already seen Bard make basic mistakes in its answers, and such mistakes matter all the more in the run-up to elections. As China and Russia invest heavily in their misinformation bot farms, Bard could become a powerful weapon for hostile foreign states seeking to interfere in critical national elections.

- While the US election garners global attention, 2024 is also crucial for other nations. India and South Africa, among others, are gearing up for national elections. Google’s policy change indicates a shift towards more responsible AI usage in political contexts.

- Other information-sharing platforms, such as X (formerly known as Twitter), are stepping up to fill the information gap that Google’s change in policy has created. In August 2023, X announced a policy change under which it would allow political advertising for US candidates and political parties, reversing the worldwide ban on political advertising it had maintained since 2019. In 2023, it was discovered that Meta, owner of Facebook, was allowing political advertisements claiming that elections, including the 2020 US election, were rigged. With large user bases, including millions of anonymous accounts, very easy content sharing, and limited fact-checking capabilities, mass social media platforms are even more vulnerable to manipulation than Google’s Bard.

The Other Side

Many social media platforms that allow controversial political advertising have defended their decisions, citing “free speech” as a key factor. YouTube said it wanted to safeguard its users’ ability to “openly debate political ideas, even those that are controversial or based on disproven assumptions”.

Regardless of political opinion, social media platforms have been accused of having a malign influence on elections, as they fail to tackle misinformation and disinformation from all sources. To ensure that this year’s many national elections are free and fair, we must act to force influential platforms to identify and remove false news and narratives, lest we allow hostile actors to influence the outcome of some of the world’s most important elections.