Global Crackdown on Election Deepfakes: Latvia Proposes New Criminal Law, Australia Calls for Stronger Regulations

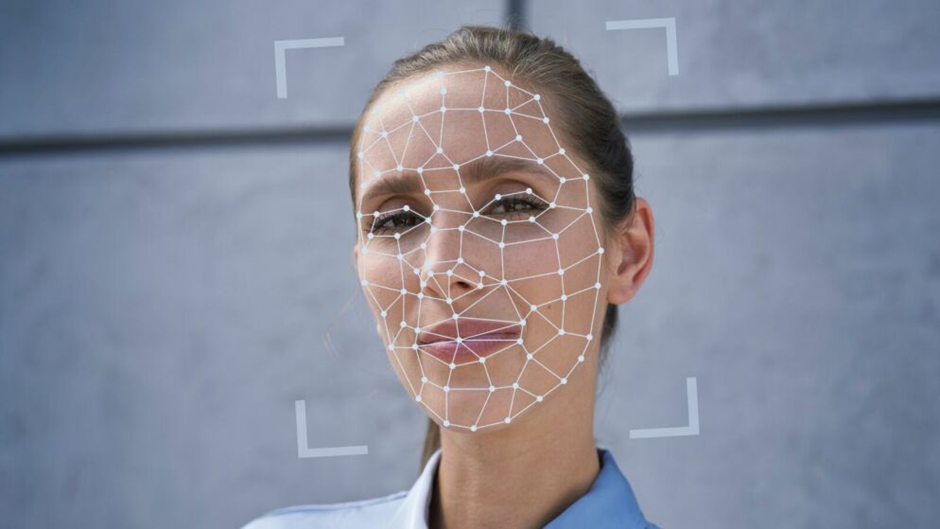

Latvia could make the use of deepfakes in political campaigns illegal. President Edgars Rinkēvičs has proposed a law that would make the use of deepfake technology to spread false information about political candidates a criminal offense, punishable by up to five years in prison. This proposal reflects growing concerns that such technology is being weaponised to undermine democratic processes.

In a proposed amendment to the Criminal Law, Rinkēvičs wrote, “Deliberately producing or disseminating false discrediting information about candidates for the position of the highest state officials in connection with the process of election, appointment or confirmation of these officials, using deepfake technology, can cause significant or even irreversible damage to the national and international interests of the Latvian state.”

Amid fears of increasing misuse of AI-generated content globally, Latvia is one of several countries taking decisive steps to protect its electoral integrity and uphold democratic principles in the face of evolving technological threats. By doing so, Latvia aims to keep pace with the rapidly advancing capabilities of AI technologies and their potential misuse. The proposal comes amid growing concern among Western governments that fake imagery could be used to disrupt elections, a worry sharpened by the UK’s announcement of a general election on July 4th, which could also be affected by deepfakes.

As the conversation on AI regulation heats up, the European Union has also taken a significant step forward. EU ministers recently gave final approval to the groundbreaking AI Act, marking the world’s first major law for regulating artificial intelligence, which is set to go into effect in June. This landmark legislation doesn’t ban deepfakes entirely, but instead focuses on controlling them through mandatory transparency requirements for creators.

Other countries are beginning to have conversations about how deepfakes and other AI technologies could impact their own elections, highlighting a growing international concern.

Over in Australia, appearing before a recent parliamentary committee on AI, Electoral Commissioner Tom Rogers highlighted the challenges posed by AI-generated content. “The Australian Electoral Commission does not possess the legislative tools, or internal technical capability, to deter, detect or adequately deal with false AI-generated content concerning the election process,” said Rogers, expressing scepticism about the prospects of significant change before the upcoming federal election.

“Countries as diverse as Pakistan, the United States, Indonesia and India have all demonstrated significant and widespread examples of deceptive AI content,” noted Rogers, stressing the urgent need for regulatory reform to address this growing threat domestically.

Australian Senator David Shoebridge also emphasised the need for regulators to have more authority to remove deepfake content, voicing concern that voters might be tricked by AI content that becomes more realistic by the month.

The rapid development of AI makes the threat a moving target for governments. While regulators may recognise the potential risks, putting the necessary controls in place proves difficult, and countries are adopting their own regulations with varying degrees of effectiveness. With the integrity of electoral processes at stake, the global response to deepfakes highlights the urgent need for comprehensive measures to safeguard democracy against digital deception.