The UK continues to cement its position as a global leader in AI safety with the launch of Inspect, the world's first state-backed AI safety testing platform released for free public use. Developed by the UK AI Safety Institute, Inspect lets a wide range of users, from startups to international governments, evaluate AI models for potential safety risks.

With more powerful AI models expected to reach the market this year, the need for robust safety measures is pressing. Untested AI risks biased decisions, unforeseen accidents, and loss of control. Inspect addresses this directly by providing a standardised way to assess AI safety, encouraging global collaboration through its open-source release, and ultimately helping to build AI that people can trust.

Inspect: A Collaborative Effort for Secure AI

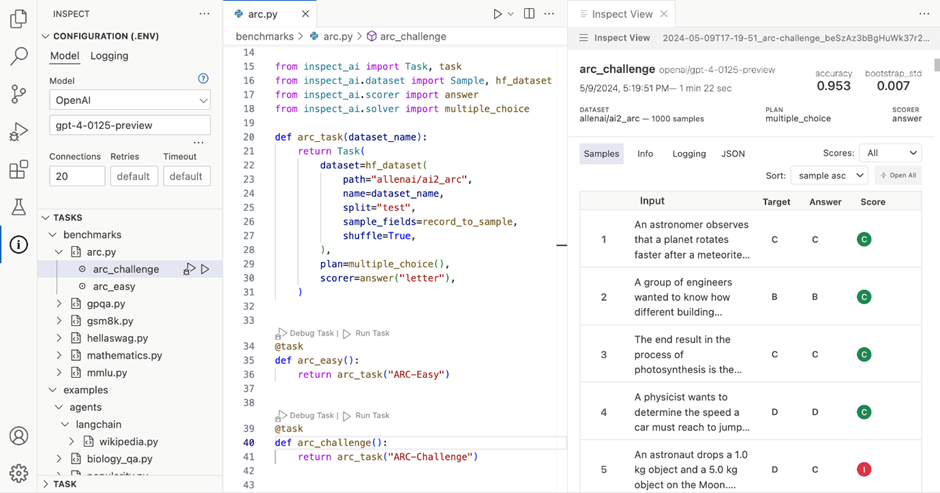

Inspect is a software library that lets testers assess specific capabilities of individual AI models and produce a score based on the results. Evaluations can cover a model's core knowledge, reasoning ability, and autonomous capabilities, giving a broad picture of potential risks.

Inspect's open-source release matters because open source is a key driver of collaboration on AI safety.

Ian Hogarth, Chair of the AI Safety Institute, posted on X: "One of the structural challenges in AI is the need for coordination across borders and institutions. I believe academia, start-ups, large companies, government and civil society [will] all play a role, and open source can be a mechanism to coordinate more broadly."

Hogarth pointed to open-source AI models as evidence that this kind of cross-border coordination already happens: Alibaba in China, for example, already offers Meta's open-source large language model on its cloud platform. "Perhaps this points at another mechanism for international collaboration over safety," he added.

By sharing the platform freely with the global AI community, the UK aims to create an environment where researchers, developers, and government agencies can work together to refine and improve AI safety testing methods. This benefits individual participants and also supports the development of more robust, widely applicable safety standards.

“As part of the constant drumbeat of UK leadership on AI safety, I have cleared the AI Safety Institute’s testing platform – called Inspect – to be open sourced,” declared Secretary of State for Science, Innovation and Technology Michelle Donelan. “This puts UK ingenuity at the heart of the global effort to make AI safe, and cements our position as the world leader in this space.”

Beyond Inspect: A Commitment to Continuous Improvement

Inspect is only one part of the UK's ongoing work on AI safety.

Alongside the release, the government announced that the AI Safety Institute, the Incubator for AI (i.AI), and Number 10 will bring together a team of leading AI talent to rapidly develop new open-source AI safety tools, further strengthening the global safety toolkit.

The UK's leadership in AI safety gives it a significant opportunity to reduce the national security risks posed by vulnerabilities in AI systems. By leading international collaboration and fostering open-source innovation, the UK is well positioned to help ensure this powerful technology is developed responsibly and securely.